Machine learning will almost certainly remain a hot topic in 2024 (to what extent, we would rather not bet! 🙂), and the use of artificial intelligence (AI) for stock picking by both individual and institutional investors is here to stay. We are committed to keeping you in the loop on new developments. Today, we review original research from Demirbaga and Xu (2023) that highlights the critical role of machine learning model execution time (the combined time for training and prediction) in empirical asset pricing. The time efficiency of machine learning algorithms becomes more pivotal given the need for swift investment decisions based on predictions generated from large volumes of real-time trading data.

Their study comprehensively evaluates execution time across various models and introduces two time-saving strategies: feature reduction and a reduction in time observations. Notably, XGBoost (short for eXtreme Gradient Boosting) emerges as a top-performing model, combining high accuracy with relatively low execution time compared to other nonlinear models. XGBoost mitigates inefficiencies by analyzing the feature distribution across all data points within a leaf node and using this insight to narrow the search space for possible feature splits.
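To make the two time-saving strategies concrete, here is a minimal sketch in plain Python. It assumes that feature importance scores are already available and that the data is a list of monthly rows. The helper names (`select_features`, `recent_window`, `project`), the column names, the importance scores and the threshold are all hypothetical and chosen for illustration; they are not taken from the paper.

```python
from datetime import date


def select_features(importances, threshold):
    """Return names of features whose importance score exceeds `threshold`."""
    return [name for name, score in importances.items() if score > threshold]


def month_index(d):
    """Map a date to a running month count so months can be compared."""
    return d.year * 12 + d.month


def recent_window(rows, end, months):
    """Keep rows dated within the last `months` months up to and including `end`."""
    last = month_index(end)
    return [r for r in rows if last - months < month_index(r["date"]) <= last]


def project(rows, keep):
    """Drop every column except the date, the target and the kept features."""
    cols = ["date", "ret"] + keep
    return [{c: r[c] for c in cols} for r in rows]


# Hypothetical importance scores, for illustration only (not from the paper).
importances = {"high_52w": 0.9, "supplier_mom": 0.6, "rd_increase": 0.01, "spinoff": 0.0}

# Toy panel: one row per month from January 2010 to December 2019, made-up values.
rows = [
    {"date": date(2010 + m // 12, m % 12 + 1, 1), "ret": 0.0,
     "high_52w": 1.0, "supplier_mom": 1.0, "rd_increase": 1.0, "spinoff": 1.0}
    for m in range(120)
]

# Strategy 1: keep only the important characteristics (threshold is arbitrary here).
keep = select_features(importances, threshold=0.05)

# Strategy 2: train on the most recent four years (48 months) only.
small = project(recent_window(rows, end=date(2019, 12, 1), months=48), keep)

print(keep, len(small))
```

Both steps shrink the training matrix before any model is fitted, so they can be combined with whichever learner is used afterwards.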

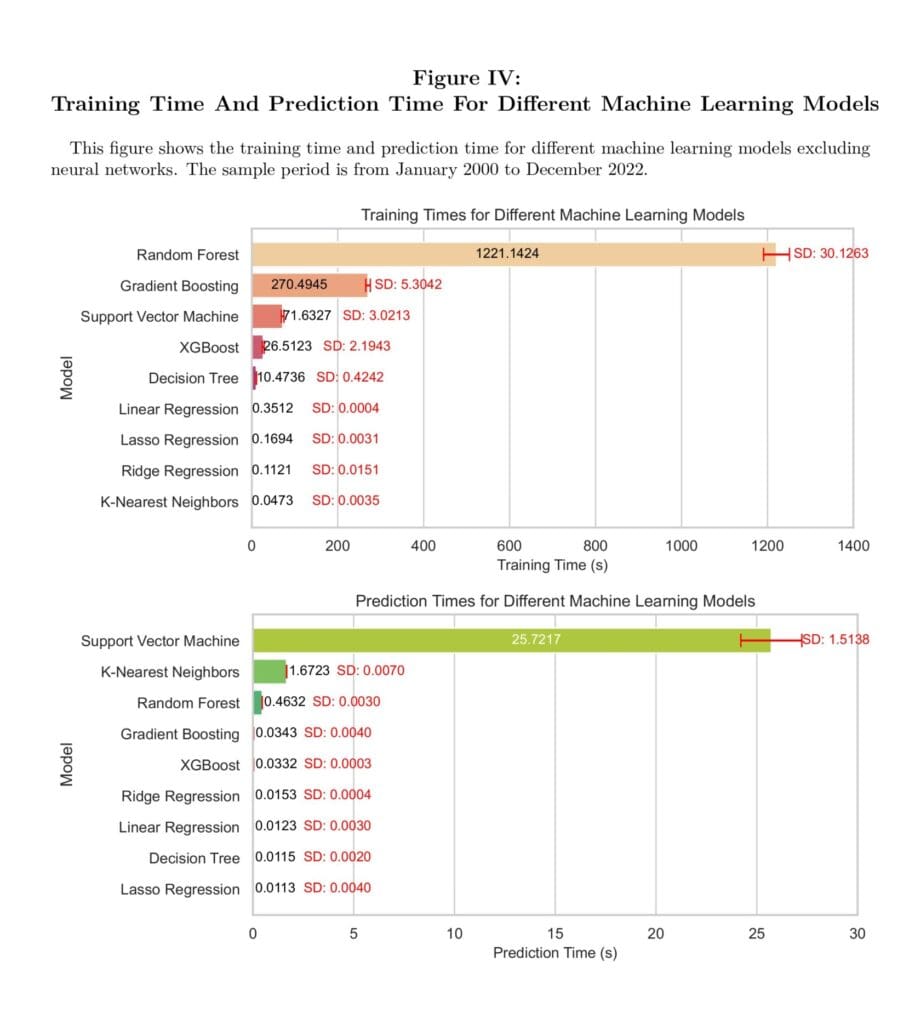

Figure IV provides an ascending-order depiction of training and prediction times for these models. Linear, LASSO and ridge regressions exhibit short training and prediction times, falling below 0.5 seconds. XGBoost outperforms gradient boosting in terms of both R2 and overall execution time. While random forest and decision trees exhibit reasonably high R2, their training and prediction times are suboptimal. The primary trade-off between predictive performance and execution time emerges between XGBoost and regression models (linear and ridge). XGBoost surpasses the regressions by at least 0.16 in terms of R2, yet the training times of the regression models are significantly shorter than that of XGBoost.
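Execution time in this sense is the training time plus the prediction time, and both can be measured separately with a wall-clock timer. The sketch below is one way to do that, not the authors' benchmark code. It assumes a model object exposing `fit` and `predict` methods; `MeanModel` and `time_model` are hypothetical names, and the toy model only stands in for a real learner.

```python
import time


class MeanModel:
    """Toy stand-in for a real learner: always predicts the training mean."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


def time_model(model, X_train, y_train, X_test):
    """Return training, prediction and total wall-clock time in seconds."""
    t0 = time.perf_counter()
    model.fit(X_train, y_train)
    t1 = time.perf_counter()
    preds = model.predict(X_test)
    t2 = time.perf_counter()
    return {
        "train_s": t1 - t0,      # time spent fitting the model
        "predict_s": t2 - t1,    # time spent generating forecasts
        "total_s": t2 - t0,      # execution time = training + prediction
        "preds": preds,
    }


result = time_model(MeanModel(), [[0], [1]], [1.0, 3.0], [[2], [3]])
print(result["train_s"], result["predict_s"], result["total_s"])
```

Any estimator with the same `fit`/`predict` interface could be passed in place of the toy model. Timings depend on the hardware and data size, so numbers from such a harness are only comparable within one setup.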

Finally, the findings underscore the interplay between model accuracy and execution time in enabling good investment decisions. For future studies, exploring additional time-efficient algorithms and assessing execution time in different financial contexts could yield valuable insights. Future research may also explore daily data for forecasting the next day's equity returns.

Authors: Umit Demirbaga and Yue Xu

Title: Machine Learning Execution Time in Asset Pricing

Link: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4587923

Abstract:

In the fast-paced world of finance, where timely decisions can yield substantial gains or losses, machine learning models with time-consuming training and prediction may miss crucial market timing opportunities. This study examines the machine learning model execution time including both training and prediction phases, in empirical asset pricing. We conduct a comprehensive analysis of machine learning execution time, examining ten models and introducing two strategies to save time: feature reduction and the reduction of time observations. Our findings reveal that XGBoost stands out as a top performer, demonstrating relatively low execution times compared to other machine learning models, with exceptional accuracy, boasting an out-of-sample R-Squared of 0.78 and a Sharpe ratio of 1.76. Furthermore, feature reduction and shorter time observations reduce execution time by as much as 18 times while also slightly enhancing investment performance. This research underscores the vital interplay between model accuracy and execution time to make accurate and prompt investment decisions in practice.
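For readers who want to see how the two headline metrics are typically computed, here is a small sketch using common textbook definitions. These are assumptions: the abstract does not spell out the exact formulas, and some asset pricing studies benchmark out-of-sample R-squared against a zero forecast rather than the sample mean. The function names are hypothetical.

```python
import math
import statistics


def r2_out_of_sample(actual, predicted):
    """1 - SSE/SST on held-out data, with SST taken around the mean of `actual`."""
    sse = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    mean = statistics.fmean(actual)
    sst = sum((a - mean) ** 2 for a in actual)
    return 1.0 - sse / sst


def annualized_sharpe(monthly_excess_returns):
    """Mean over sample standard deviation of monthly excess returns, scaled by sqrt(12)."""
    mu = statistics.fmean(monthly_excess_returns)
    sigma = statistics.stdev(monthly_excess_returns)
    return mu / sigma * math.sqrt(12)


# Made-up numbers, purely to show the calls.
print(r2_out_of_sample([1.0, 2.0, 3.0], [1.1, 1.9, 3.2]))
print(annualized_sharpe([0.02, -0.01, 0.03, 0.01]))
```

The square-root-of-12 scaling assumes monthly returns, which matches the monthly data described in the paper.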

As always, we present several exciting figures and tables:

Notable quotations from the academic research paper:

“Time is an invaluable resource in the fast-paced world of finance, where timely decisions can lead to substantial gains or losses. The rapid evolution of financial markets demands real-time responses, and machine learning models that consume excessive time for training and prediction might miss critical market timing opportunities. Machine learning models that exhibit prolonged times may compromise the timeliness of decision-making, rendering their valuable insights less actionable.

Beyond market timing, the execution time of machine learning models carries economic implications. The cost of time encompasses multiple dimensions, including labor costs for analysts and the expenses associated with running resource-intensive computations.

This paper conducts a comprehensive evaluation of machine learning execution time in empirical asset pricing. We conduct a comparable analysis of execution time for a diverse set of machine learning models. Additionally, we investigate two strategies to reduce execution time: first, by reducing the number of characteristics, and second, by reducing training and prediction time observations.

We implement ten distinct machine learning algorithms, using a comprehensive set of monthly stock-level characteristics. Among these algorithms, XGBoost exhibits the highest predictive performance, as evident from its superior out-of-sample R2 and mean squared errors. Additionally, the long-short portfolio constructed using XGBoost demonstrates the highest Sharpe ratio, attaining a remarkable 1.76. While linear, lasso, and ridge regressions exhibit the shortest training times, each requiring less than 0.4 seconds, XGBoost also showcases a relatively efficient training time of 26.51 seconds, significantly outperforming random forest (1221.14 seconds) and gradient boosting (270.49 seconds). In terms of prediction time, XGBoost is similar to regression models. These results highlight the primary tradeoff between investment performance and training time lies between XGBoost and regression models. However, despite regressions’ advantage in training time, their lower predictive accuracy and inferior investment performance suggest that regressions may not be the ideal choice in real investment decisions.

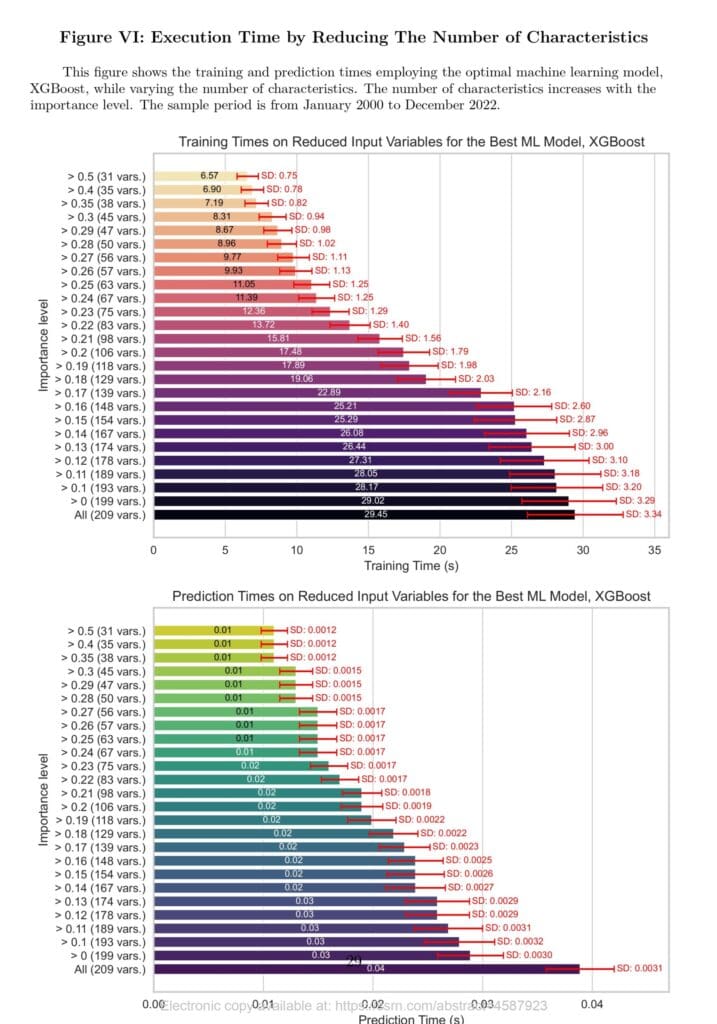

We narrow our attention to XGBoost as our primary machine learning model due to its superior predictive accuracy and investment performance compared to other models. To further enhance the time efficiency of machine learning algorithms, we employ our first method: reducing the number of characteristics based on their importance in prediction. Notably, characteristics such as the 52-week high, supplier momentum, maximum return over a month, and idiosyncratic risk are identified as having the highest importance, while others like unexpected R&D increases and spinoffs are found unimportant and consequently removed from consideration.

Furthermore, we investigate the reduction of time observations to enhance machine learning time efficiency. Our findings illustrate that employing the most recent four years of monthly data observations results in a remarkable 18-fold reduction in training time and a threefold reduction in prediction times compared to using a historical dataset spanning six decades. Interestingly, the Sharpe ratio exhibits a slight improvement when employing recent four-year observations in contrast to the longer 60-year dataset. This suggests that data spanning six decades may not hold significant practical value for training machine learning models. Instead, shorter time periods prove to be more informative, indicating that financial practitioners should consider training models within shorter time frames, enabling timely predictions and responses to evolving market dynamics.

Our finding reveals that the XGBoost machine learning model exhibits a distinct advantage in terms of execution time without compromising predictive accuracy. Moreover, we introduce two efficient methods aimed at time-saving: eliminating redundant characteristics and reducing the time observations in the training dataset. Our primary contribution is to document the significance of execution time as the financial landscape increasingly harnesses machine learning’s potential for predicting asset prices. While accuracy remains paramount, a model’s ability to deliver timely forecasts can significantly influence its practical applicability and impact on decision-making. By factoring in the training and prediction times of machine learning models, researchers and practitioners can find the most appropriate model that unlock actionable insights while navigating the intricacies of real-time decision-making.

The prediction time illustrated in Figure VI follows a pattern akin to the training time. Prediction times remain below 0.02 seconds for datasets comprising variables with an importance score exceeding 0.24. As more features are incorporated, prediction times range from 0.03 seconds to 0.04 seconds. This aligns with the observation from the benchmark models in Figure IV, where prediction times are notably shorter than training times.

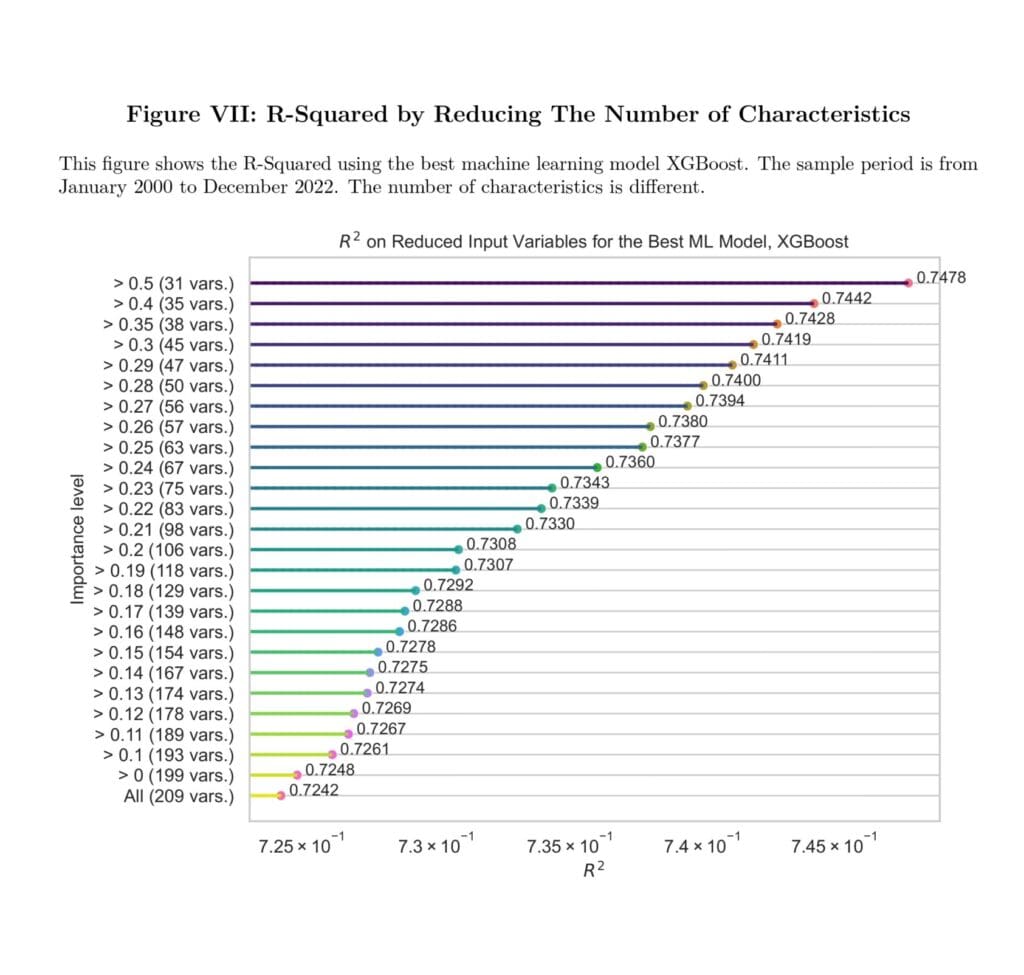

Figure VII shows the out-of-sample R2 obtained through the reduction of the number of characteristics, guided by their importance levels. To facilitate a more intuitive observation of the slight differences between R2, we employ a logarithmic scale for data visualization. Notably, as we include only the important characteristics, there is a discernible increase in R2, albeit of modest magnitude. This observation underscores the beneficial impact of reducing irrelevant characteristics within machine learning models, ultimately enhancing their predictive performance.”

