Top Models for Natural Language Understanding (NLU) Usage

In recent years, the Transformer architecture has been widely adopted in Natural Language Processing (NLP) and Natural Language Understanding (NLU). Google AI’s introduction of Bidirectional Encoder Representations from Transformers (BERT) in 2018 set new standards in NLP. Since then, BERT has paved the way for more advanced and more efficient models. [1]

We discussed the BERT model in our previous article. Here we list alternatives for readers who are considering a project built on a large language model (as we are 😀), would like to avoid ChatGPT, and want to see the options in one place. What follows is a compilation of the most notable alternatives to BERT for Natural Language Understanding (NLU) projects.

Keep in mind that computational cost still depends on factors like model size, hardware specifications, and the specific NLP task at hand. That said, most of the models listed below are generally known for improved efficiency compared to the original BERT model, while a few (such as GPT-3) are considerably larger.

Models overview:

- DistilBERT

This is a distilled version of BERT, which retains much of BERT’s performance while being lighter and faster.

- ALBERT (A Lite BERT)

ALBERT introduces parameter-reduction techniques to reduce the model’s size while maintaining its performance.

- RoBERTa

Based on BERT, RoBERTa keeps the same architecture but optimizes the training process (more data, larger batches, longer training) and achieves better results.

- ELECTRA

ELECTRA replaces the traditional masked language model pre-training objective with a more computationally efficient approach, making it faster than BERT.

- T5 (Text-to-Text Transfer Transformer)

T5 frames all NLP tasks as text-to-text problems, making it more straightforward and efficient for different tasks.

- GPT-2 and GPT-3

While larger than BERT, these models have shown impressive results and can be efficient for certain use cases due to their generative nature.

- DistilGPT-2 and DistilGPT-3

Like DistilBERT, these are distilled versions of larger GPT models that trade some performance for efficiency. DistilGPT-2 is publicly available; distilled variants of GPT-3 are less well established.

More details:

DistilBERT

DistilBERT is a compact and efficient version of the BERT (Bidirectional Encoder Representations from Transformers) language model. Introduced by researchers at Hugging Face, DistilBERT retains much of BERT’s language understanding capability while significantly reducing its size and computational requirements. This is achieved through a process called “distillation,” in which the knowledge of the larger BERT model (the teacher) is transferred to a smaller architecture (the student). [2] [3]

Figure 1. The DistilBERT model architecture and components. [4]

Because it compresses the original BERT model, DistilBERT runs faster and requires less memory, making it more practical for many natural language processing tasks. The authors report a model that is 40% smaller and 60% faster than BERT while retaining 97% of its language understanding performance, which makes it an attractive option for applications with limited computational resources or where speed is crucial. It has been widely adopted for a range of NLP tasks as an efficient alternative to its larger predecessor. [2] [3]
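To make the idea of distillation more concrete, here is a minimal sketch of a soft-target distillation loss in plain Python. It assumes the common formulation in which the student is trained to match the teacher's temperature-softened output distribution; the logits, the temperature value, and the function names are illustrative assumptions, and the DistilBERT paper combines a loss of this kind with additional terms that are not shown here.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the student's to the teacher's softened distribution."""
    p = softmax(teacher_logits, temperature)  # teacher (target)
    q = softmax(student_logits, temperature)  # student (prediction)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores over three candidate tokens.
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]   # close to the teacher
poor_student = [0.5, 1.0, 4.0]   # ranks the candidates the wrong way round

print("good student loss:", distillation_loss(teacher, good_student))
print("poor student loss:", distillation_loss(teacher, poor_student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what pushes the smaller model toward the larger model's behavior.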

ALBERT (A Lite BERT)

ALBERT, short for “A Lite BERT,” is a groundbreaking language model introduced by Google Research. It aims to make large-scale language models more computationally efficient and accessible. The key innovation in ALBERT lies in its parameter-reduction techniques, which significantly reduce the number of model parameters without sacrificing performance.

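ALBERT relies on two parameter-reduction techniques. First, it factorizes the embedding matrix: tokens are mapped to a small embedding space and then projected up to the hidden size, rather than being embedded directly at the hidden size as in BERT. Second, it uses cross-layer parameter sharing, meaning that the encoder layers reuse the same parameters, which further reduces the model’s size. ALBERT also replaces BERT’s next sentence prediction objective with a sentence-order prediction task.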
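The effect of these parameter-reduction techniques can be illustrated with some simple arithmetic. The sketch below counts parameters for a factorized embedding (as described in the ALBERT paper) and for cross-layer sharing. The vocabulary, hidden, and embedding sizes are in the range used by base-sized models, and the per-layer figure is a rough assumption chosen only for illustration.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Parameters in the token-embedding block.

    Without factorization: one vocab_size x hidden_size matrix.
    With factorization: a vocab_size x embedding_size matrix followed by
    an embedding_size x hidden_size projection.
    """
    if embedding_size is None:
        return vocab_size * hidden_size
    return vocab_size * embedding_size + embedding_size * hidden_size

def encoder_params(per_layer_params, num_layers, share_across_layers):
    """Parameters in the encoder stack, with or without cross-layer sharing."""
    if share_across_layers:
        return per_layer_params  # every layer reuses the same weights
    return per_layer_params * num_layers

VOCAB, HIDDEN, EMBED, LAYERS = 30_000, 768, 128, 12
PER_LAYER = 7_000_000  # assumed, approximate size of one encoder layer

print("embeddings, unfactorized:", embedding_params(VOCAB, HIDDEN))
print("embeddings, factorized:  ", embedding_params(VOCAB, HIDDEN, EMBED))
print("encoder, unshared:       ", encoder_params(PER_LAYER, LAYERS, False))
print("encoder, shared:         ", encoder_params(PER_LAYER, LAYERS, True))
```

Note that sharing reduces the number of stored parameters, not the amount of computation: the input still passes through every layer.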

![ALBERT adjusts from BERT, and ELBERT changes from ALBERT.](https://www.researchgate.net/publication/365448424/figure/fig1/AS:11431281097639424@1668654791155/ALBERT-adjusts-from-BERT-and-ELBERT-changes-from-ALBERT-Compared-with-ALBERT-ELBERT.png)

Figure 2. ALBERT adjusts from BERT, and ELBERT changes from ALBERT. Compared with ALBERT, ELBERT has no more extra parameters, and the calculation amount of early exit mechanism is also ignorable (less than 2% of one encoder). [6]

By reducing the number of parameters and introducing these innovative techniques, ALBERT achieves similar or even better performance than BERT on various natural language understanding tasks while requiring fewer computational resources. This makes ALBERT a compelling option for applications where resource efficiency is critical, enabling more widespread adoption of large-scale language models in real-world scenarios. [5]

RoBERTa

RoBERTa (A Robustly Optimized BERT Pretraining Approach) is a language model introduced by Facebook AI. It keeps the architecture of BERT but uses a more extensive and better-tuned pretraining process. During pretraining, RoBERTa uses larger batch sizes and more data, trains for longer, and drops the next sentence prediction task, resulting in improved representations of language. These training optimizations lead to better generalization, allowing RoBERTa to outperform BERT on various natural language processing tasks. It performs well on tasks like text classification and question answering, and reported state-of-the-art results on benchmark datasets at the time of its release.

RoBERTa’s success can be attributed to careful hyperparameter tuning and a larger, more diverse pretraining corpus. By maximizing the model’s exposure to diverse linguistic patterns, RoBERTa achieves higher levels of robustness and performance. Its effectiveness, ease of use, and compatibility with existing BERT-based applications have made it a popular choice for researchers and developers in the NLP community. [7]
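One of the pretraining changes described in the RoBERTa paper is dynamic masking: instead of fixing the masked positions once during preprocessing, a new mask is sampled each time a sequence is fed to the model. The sketch below contrasts the two approaches. It is deliberately simplified (every selected token becomes `[MASK]`, whereas BERT-style masking also sometimes keeps or randomizes the token), and the function name and example sentence are assumptions made for illustration.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob, rng):
    """Hide a random subset of tokens; return (masked_input, labels).

    labels[i] holds the original token where position i was masked,
    and None everywhere else.
    """
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels

sentence = "the model learns language from large amounts of text".split()
rng = random.Random(0)

# Static masking: one pattern, chosen once and reused in every epoch.
static_input, _ = mask_tokens(sentence, 0.15, rng)
print("static: ", " ".join(static_input))

# Dynamic masking: a fresh pattern every time the sentence is seen.
for epoch in range(3):
    dynamic_input, _ = mask_tokens(sentence, 0.15, rng)
    print("epoch", epoch, " ".join(dynamic_input))
```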

ELECTRA

ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately) is a language model proposed by researchers at Google Research. Unlike masked language models such as BERT, ELECTRA uses a more sample-efficient pretraining task. A portion of the input tokens is replaced with plausible alternatives produced by a small auxiliary network called the “generator.” The main encoder network, called the “discriminator,” is then trained to predict for every token whether it was replaced or not. This helps the model learn more efficiently, because it receives a training signal from every input position rather than only from the masked ones.

By employing this “replaced-token detection” task during pretraining, ELECTRA achieves better performance than BERT for the same model size and compute budget. It is also cheaper to train, since it learns from all input tokens rather than a small masked subset. ELECTRA has demonstrated strong results on various natural language understanding tasks, outperforming comparable masked language models. Its efficiency and improved performance make it a noteworthy addition to the landscape of language models. [8]
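The replaced-token detection task can be sketched as a data-preparation step. In ELECTRA, a small generator network proposes the replacement tokens and the main encoder (the discriminator) learns to label each position as original or replaced. In the toy example below, the generator is replaced by uniform random sampling from a hypothetical vocabulary, which is an assumption made purely to keep the example self-contained.

```python
import random

def corrupt(tokens, vocab, replace_prob, rng):
    """Toy stand-in for the generator: swap some tokens for sampled ones.

    Returns (corrupted_tokens, labels), where labels[i] is 1 if the token
    at position i differs from the original and 0 otherwise.
    """
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < replace_prob:
            candidate = rng.choice(vocab)
        else:
            candidate = token
        corrupted.append(candidate)
        # A sampled token that happens to equal the original counts as original.
        labels.append(int(candidate != token))
    return corrupted, labels

vocab = ["chef", "cooked", "ate", "the", "meal", "car", "quickly"]
sentence = ["the", "chef", "cooked", "the", "meal"]

corrupted, labels = corrupt(sentence, vocab, 0.3, random.Random(42))
for token, label in zip(corrupted, labels):
    print(f"{token:10s} {'replaced' if label else 'original'}")
```

The discriminator's training targets are the `labels` list: one binary decision per token, which is why every position contributes to the loss.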

T5 (Text-to-Text Transfer Transformer)

T5 (Text-to-Text Transfer Transformer) is a language model introduced by Google Research. Unlike models that are designed with task-specific output layers, T5 adopts a unified “text-to-text” framework. It formulates every NLP task as a text-to-text problem, where both the input and the output are text strings. With this approach, T5 can handle a wide range of tasks, including text classification, translation, question answering, summarization, and more. This flexibility is achieved by adding a task-specific prefix to the input text during training and decoding.

Figure 3. Diagram of our text-to-text framework. Every task we consider uses text as input to the model, which is trained to generate some target text. This allows us to use the same model, loss function, and hyperparameters across our diverse set of tasks including translation (green), linguistic acceptability (red), sentence similarity (yellow), and document summarization (blue). It also provides a standard testbed for the methods included in our empirical survey. [9]

T5’s unified architecture simplifies the model’s design and makes it easier to adapt to new tasks without architectural modifications. It also enables efficient multitask training, where the model is trained on multiple NLP tasks simultaneously. T5’s versatility and strong performance on various benchmarks have made it a popular choice among researchers and practitioners. Its ability to handle diverse tasks within a single framework makes it a powerful tool in the NLP community. [9]
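The task-prefix idea is easy to illustrate. The sketch below renders different tasks as plain input strings using the prefix formats shown in the T5 paper's overview figure. The function name and the task keys (`translate_en_de`, `summarize`, `cola`, `stsb`) are hypothetical names chosen for this example, not part of any library.

```python
def to_text_to_text(task, **fields):
    """Render one task instance as a single prefixed input string."""
    if task == "translate_en_de":
        return f"translate English to German: {fields['text']}"
    if task == "summarize":
        return f"summarize: {fields['text']}"
    if task == "cola":  # linguistic acceptability
        return f"cola sentence: {fields['sentence']}"
    if task == "stsb":  # sentence similarity
        return (
            f"stsb sentence1: {fields['sentence1']} "
            f"sentence2: {fields['sentence2']}"
        )
    raise ValueError(f"unknown task: {task}")

print(to_text_to_text("translate_en_de", text="That is good."))
print(to_text_to_text("cola", sentence="The course is jumping well."))
print(to_text_to_text(
    "stsb",
    sentence1="The rhino grazed on the grass.",
    sentence2="A rhino is grazing in a field.",
))
```

Because the target is also a string (a translation, a label word, or a similarity score written as text), the same model and loss function can be used for all of these tasks.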

GPT-2 and GPT-3

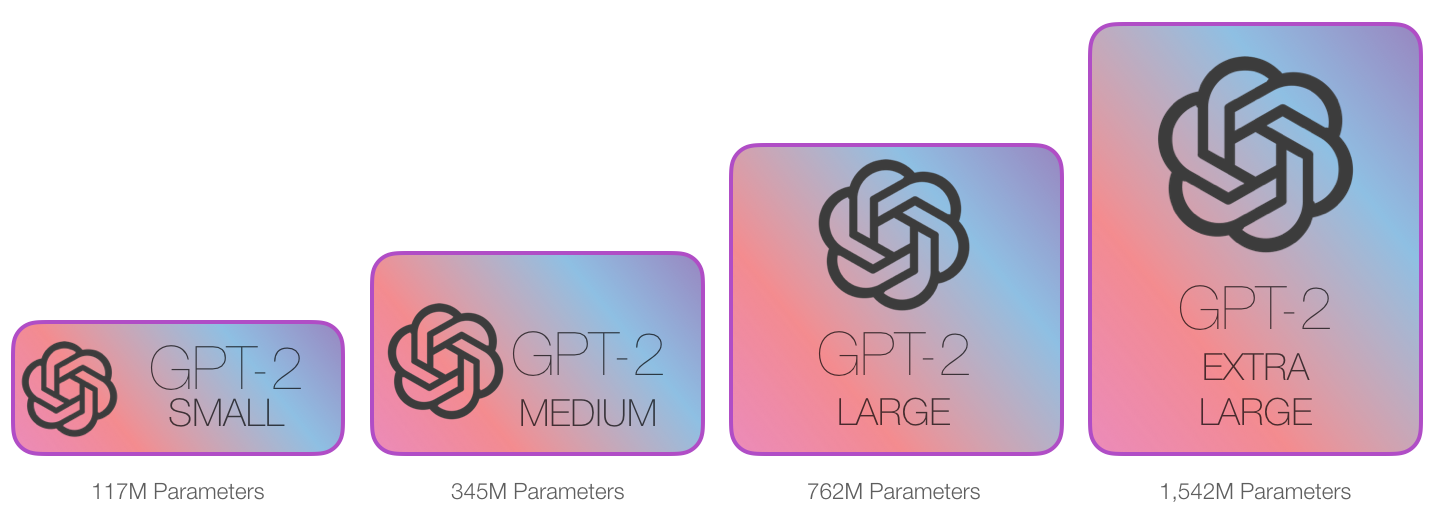

GPT-2 and GPT-3 are language models developed by OpenAI, based on the Generative Pre-trained Transformer (GPT) architecture. [10]

- GPT-2: Released in 2019, GPT-2 is a large-scale language model with 1.5 billion parameters in its largest version. It uses a transformer-based architecture and is trained in an unsupervised manner on a vast corpus of text from the internet. GPT-2 is capable of generating coherent and contextually relevant text, which made it a significant advance in natural language generation. It has been used for creative applications, text completion, and generating human-like responses in chatbots.

- GPT-3: Released in 2020, GPT-3 is the successor to GPT-2 and was, at its release, one of the largest language models ever trained, with 175 billion parameters. Its size enables more impressive language understanding and generation capabilities. GPT-3 can perform a wide array of tasks, including language translation, question answering, text summarization, and even writing code, without requiring fine-tuning on task-specific data; a few examples in the prompt are often enough. It has attracted significant attention for output that can appear to reflect human-like reasoning and language comprehension.

Figure 4. Types of GPT-2 [11]

Both GPT-2 and GPT-3 have pushed the boundaries of natural language processing and represent significant milestones in the development of large-scale language models. Their versatility and proficiency in various language-related tasks have opened up new possibilities for applications in industries ranging from chatbots and customer support to creative writing and education. [10]
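Performing a task without fine-tuning usually means describing it in the prompt, often together with a few solved examples (so-called few-shot prompting). The sketch below only builds such a prompt as a string; it does not call any model. The `Input:`/`Output:` layout and the function name are assumptions made for illustration, and real prompts can follow any consistent format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, solved examples, and an open query into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    instruction="Classify the sentiment of each sentence as positive or negative.",
    examples=[
        ("The earnings report beat expectations.", "positive"),
        ("The company issued a profit warning.", "negative"),
    ],
    query="Revenue grew for the fifth quarter in a row.",
)
print(prompt)
```

A generative model then continues the text after the final `Output:`, and that continuation is read as the answer.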

DistilGPT-2 and DistilGPT-3

DistilGPT2, short for Distilled-GPT2, is an English-language model based on the Generative Pre-trained Transformer 2 (GPT-2) architecture. It is a compressed version of GPT-2, developed using knowledge distillation to create a more efficient model. DistilGPT2 is pre-trained with the supervision of the 124 million parameter version of GPT-2 but has only 82 million parameters, making it faster and lighter. [12]

Just like its larger counterpart, GPT-2, DistilGPT2 can be used to generate text. However, users should also refer to information about GPT-2’s design, training, and limitations when working with this model.

Model Details:

- Developed by: Hugging Face

- Model type: Transformer-based Language Model

- Language: English

- License: Apache 2.0

For more information about DistilGPT2, see its model page: distilgpt2 · Hugging Face

Distillation refers to a process where a large and complex language model (like GPT-3) is used to train a smaller and more efficient version of the same model. The goal is to transfer the knowledge and capabilities of the larger model to the smaller one, making it more computationally friendly while maintaining a significant portion of the original model’s performance.

The “Distil” prefix is often used in the names of these smaller models to indicate that they are distilled versions of larger models. For example, “DistilBERT” is a distilled version of the BERT model, and “DistilGPT2” is a distilled version of the GPT-2 model. These models are designed to be faster and more efficient while still retaining useful language understanding capabilities.

Researchers and developers have also experimented with distillation to create more efficient versions of GPT-3. Unlike DistilGPT2, however, there is no single standard “DistilGPT-3” release, and the availability and specifics of such models vary. It is best to refer to the latest research and official sources for up-to-date information.

[1] Top Ten BERT Alternatives For NLU Projects (analyticsindiamag.com)

[2] DistilBERT (huggingface.co)

[3] Sanh, Victor, et al. “DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter.” arXiv preprint arXiv:1910.01108 (2019).

[4] The DistilBERT model architecture and components. | Download Scientific Diagram (researchgate.net)

[6] ea88386e.webp (1280×710) (morioh.com)

[7] roberta-large · Hugging Face

[10] GPT-2 (GPT2) vs. GPT-3 (GPT3): The OpenAI Showdown – DZone

[12] distilgpt2 · Hugging Face

Author:

Lukas Zelieska, Quant Analyst
