Can We Finally Use ChatGPT as a Quantitative Analyst?

In two of our previous articles, we explored the idea of using artificial intelligence to backtest trading strategies. Since then, AI has continued to develop, with tools like ChatGPT evolving from simple Q&A assistants into more capable systems that may aid in developing and testing investment strategies, at least according to some of the more optimistic voices in the field. Over a year has passed since our first experiments, and with all the current hype around the usefulness of large language models (LLMs), we believe it’s the right time to revisit this topic critically. Our goal is to evaluate how well today’s AI models can perform as quasi-junior quantitative analysts, highlighting not only the promising use cases but also the limitations that still remain.

Model selection

First, we needed to select a model suitable for the task. We explored Claude AI, Gemini Advanced (with Deep Research), and ChatGPT, as these are some of the most widely used AI tools today. AI models are progressing very quickly, and each is better at some sub-tasks and worse at others; however, from our perspective, the differences between them were not significant. Based on our needs (data input, code interpretation, and reasoning), we chose ChatGPT as the primary tool for our analysis. As for the specific version, we selected the GPT-4o model, as it proved to be the most versatile overall. We also considered the GPT-4.5 model (which OpenAI advertises as a better model for analytical tasks), but since it is expected to be deprecated soon, we felt an article based on it would not remain relevant for long.

What we want to accomplish

As the title of this article suggests, our goal was to find out whether the process of creating a trading strategy can be assisted by AI or, if not the whole process, whether at least some part of it can be outsourced to AI, and whether we can still trust the results. To that end, we kept the setup simple: we worked with ChatGPT and asked it to help us create an asset allocation strategy using three asset classes (equities, fixed income, and commodities).

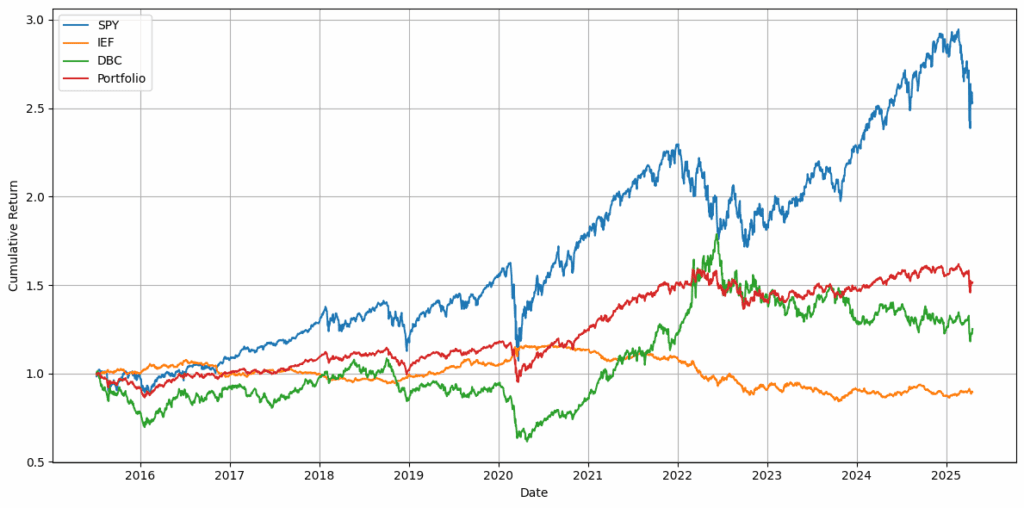

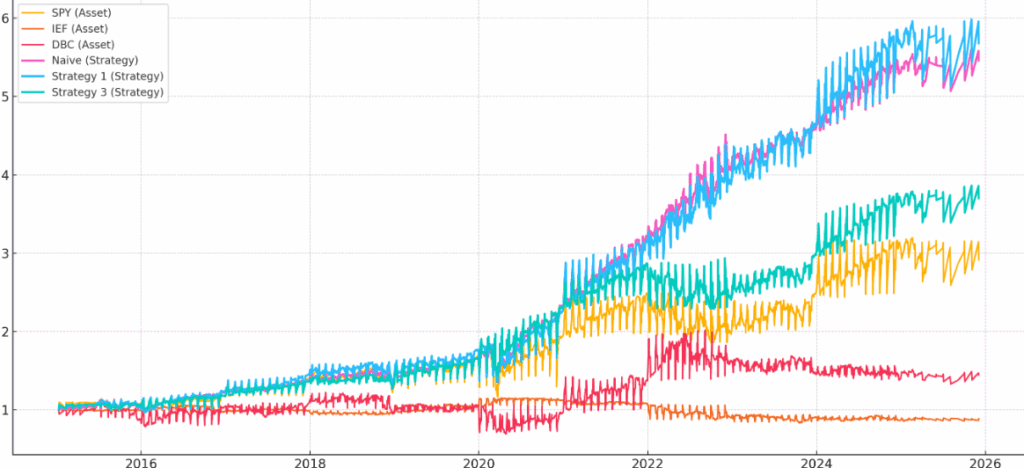

Our investment universe consisted of SPY (SPDR S&P 500 ETF Trust), IEF (iShares 7-10 Year Treasury Bond ETF), and DBC (Invesco DB Commodity Index Tracking Fund), and our tests were performed on data from 07.07.2015 to 17.04.2025.

First iterations

Once the data were prepared (we ran into some issues, which we summarize later), implementing a simple trading strategy, such as a fixed-percentage allocation, was a relatively easy task. Simple strategies assign a fixed portion of capital to each asset, regardless of market conditions. For example, you might allocate 60% to stocks, 30% to bonds, and 10% to commodities. In code, this just means multiplying each asset’s return by its target weight and summing the results to get the portfolio return. You don’t need complex indicators or dynamic rebalancing rules, just basic arithmetic on time series data. This kind of strategy is an ideal starting point for AI-assisted automation and testing because the logic is straightforward and can be applied consistently across the dataset.
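To make the arithmetic concrete, here is a minimal Python sketch of a fixed-weight portfolio using the 60/30/10 split from the example above. The return numbers are made up for illustration and the function name `fixed_weight_returns` is hypothetical; the sketch assumes simple (not log) daily returns and ignores transaction costs.

```python
# Hypothetical daily simple returns (illustrative numbers, not the article's data)
asset_returns = {
    "SPY": [0.010, -0.005, 0.002],
    "IEF": [0.001, 0.002, -0.001],
    "DBC": [-0.004, 0.003, 0.005],
}

# Target weights from the 60/30/10 example in the text
weights = {"SPY": 0.60, "IEF": 0.30, "DBC": 0.10}


def fixed_weight_returns(returns, weights):
    """Portfolio return on each day = sum over assets of weight * asset return."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    lengths = {len(returns[asset]) for asset in weights}
    if len(lengths) != 1:
        raise ValueError("all return series must have the same length")
    n_days = lengths.pop()
    return [
        sum(weights[asset] * returns[asset][day] for asset in weights)
        for day in range(n_days)
    ]


portfolio = fixed_weight_returns(asset_returns, weights)
print(portfolio)
```

Summing weighted returns every day implicitly assumes daily rebalancing back to the target weights; a buy-and-hold portfolio would instead let the weights drift with prices, which is worth stating explicitly when asking a chatbot to implement the strategy.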

The AI model can also do a little more: not only can it write code for such a basic strategy, but it can also suggest strategies on its own. Therefore, we started with a naive strategy and asked the AI to suggest rational, reasonable modifications of the allocation ratios, as well as strategies that are more profitable in terms of returns, Sharpe ratio, and Calmar ratio.
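Since the comparison relies on returns, volatility, Sharpe ratio, maximum drawdown, and Calmar ratio, it helps to have your own reference implementation of these metrics. The sketch below uses one common set of conventions, not necessarily the one ChatGPT used: it assumes simple daily returns, 252 trading days per year, a geometric annualized return, and a zero risk-free rate in the Sharpe ratio. The name `performance_metrics` is hypothetical.

```python
import math

TRADING_DAYS = 252  # assumed number of trading days per year for daily data


def performance_metrics(returns, periods_per_year=TRADING_DAYS):
    """Annualized return, volatility, Sharpe, max drawdown and Calmar ratio.

    `returns` are simple (not log) periodic returns. Sharpe here is
    annualized return / annualized volatility, i.e. a zero risk-free rate.
    """
    n = len(returns)
    if n < 2:
        raise ValueError("need at least two return observations")
    if any(r <= -1.0 for r in returns):
        raise ValueError("a return of -100% or worse wipes out the portfolio")

    # Compound returns into an equity curve starting at 1.0
    equity = [1.0]
    for r in returns:
        equity.append(equity[-1] * (1.0 + r))

    # Geometric (CAGR-style) annualized return
    ann_return = equity[-1] ** (periods_per_year / n) - 1.0

    # Sample standard deviation of returns, scaled by sqrt(periods per year)
    mean = sum(returns) / n
    variance = sum((r - mean) ** 2 for r in returns) / (n - 1)
    ann_vol = math.sqrt(variance * periods_per_year)

    # Max drawdown: deepest fall of the equity curve below its running peak
    peak, max_dd = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        max_dd = min(max_dd, value / peak - 1.0)

    return {
        "annualized_return": ann_return,
        "annualized_volatility": ann_vol,
        "sharpe": ann_return / ann_vol if ann_vol > 0 else float("nan"),
        "max_drawdown": max_dd,
        "calmar": ann_return / abs(max_dd) if max_dd < 0 else float("nan"),
    }
```

Other conventions exist (for example, an arithmetic mean of excess returns in the Sharpe numerator), so whichever you pick, check that the model’s code uses the same one before comparing numbers.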

Suggestions

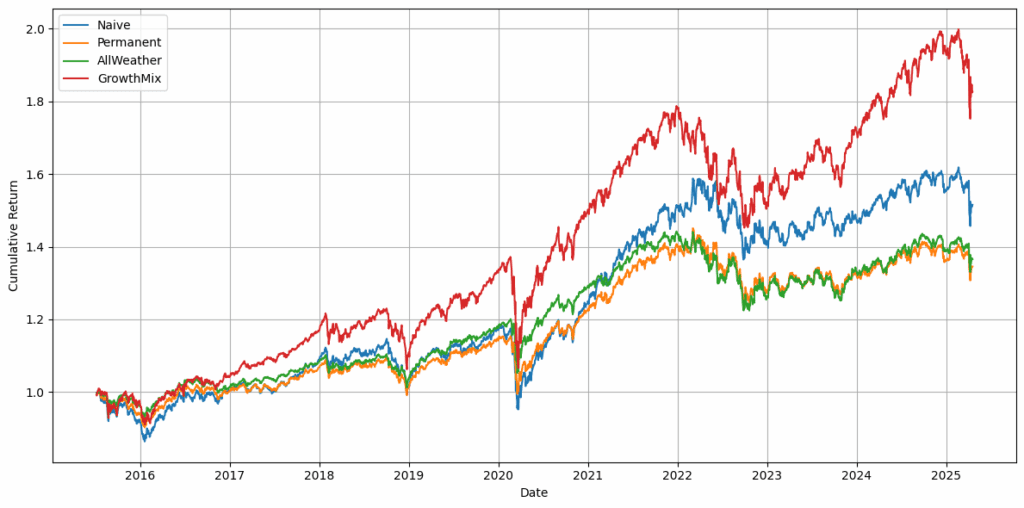

After running the basic fixed asset allocation strategies and checking their performance, the next step was clear: can we do better? It’s one thing to create a simple portfolio with fixed weights, but markets are rarely that cooperative. So we asked ChatGPT not just to test the naive strategy (and variations) but also to help come up with reasonable modifications that might improve the results without making the whole thing overly complicated.

This is where things get more interesting. Instead of just assigning static weights, we explored small variations: what happens if we shift a bit more into bonds during rough periods, or slightly increase equity exposure in strong uptrends? We deliberately avoided jumping into complex machine-learning models or regime-switching techniques. The goal here was modest: introduce just enough structure to reflect real-world thinking, such as adapting to recent performance or volatility. ChatGPT could handle that (once again, not without problems), and in the end, it was able to suggest ways to re-weight the portfolio or apply basic filters to avoid major drawdowns. As a result of these prompts, we obtained the following equity curves:
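As an illustration of a "basic filter", here is a hypothetical sketch of a simple moving average (SMA) trend filter for a single asset: stay invested while the price closes above its trailing SMA, otherwise hold cash. The exact rules ChatGPT proposed are not reproduced here; the window length, the zero return on cash, and the absence of trading costs are all assumptions.

```python
def trailing_sma(prices, window):
    """Simple moving average of the last `window` prices; None until enough data."""
    return [
        sum(prices[t - window + 1 : t + 1]) / window if t >= window - 1 else None
        for t in range(len(prices))
    ]


def sma_filter_returns(prices, window):
    """Returns of holding one asset only while its close is above its SMA.

    The signal known at the close of day t is applied to the return from t to
    t + 1, which avoids look-ahead bias. Out of the market the rule earns 0
    (cash without interest, no transaction costs).
    """
    sma = trailing_sma(prices, window)
    strategy = []
    for t in range(len(prices) - 1):
        next_return = prices[t + 1] / prices[t] - 1.0
        invested = sma[t] is not None and prices[t] > sma[t]
        strategy.append(next_return if invested else 0.0)
    return strategy


# Illustrative prices and a short window so the result is easy to check by hand
example = sma_filter_returns([10, 11, 12, 11, 10, 12], window=3)
print(example)
```

The one-day lag between signal and return is the detail most worth checking in generated code: using the same day’s close for both the signal and the return introduces look-ahead bias that can make a filter look far better than it is.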

Combining and optimising

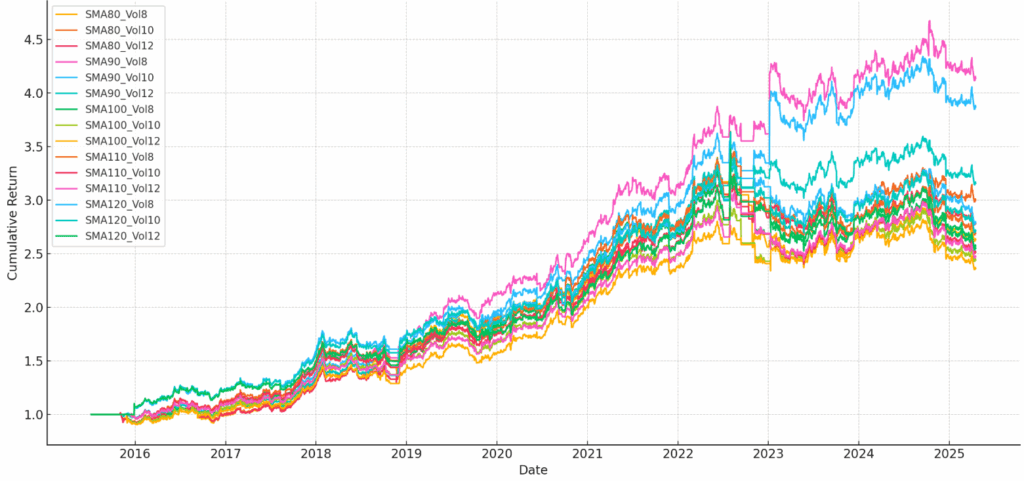

Once we saw that active asset allocation strategies could improve performance, the next challenge was to find a more balanced strategy – one that not only performs well on paper but also feels robust and sensible. It’s easy to get caught up in tuning parameters and choosing the best period for indicators to squeeze out a slightly higher Sharpe ratio, but there’s always a trade-off. A strategy that looks great in one period might fall apart in another.

To explore this, we asked ChatGPT to help us test different versions of the strategy by adjusting key parameters – in our case, mostly timeframes. The idea wasn’t to blindly optimize for the best result but to understand how sensitive the strategy is to changes. If small shifts in a parameter lead to big swings in performance, that’s a red flag.
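The sensitivity check described above can be sketched generically: score the strategy over a grid of lookback windows and flag large jumps between neighbouring values. In the sketch below, `evaluate` would normally run the full backtest; here it is replaced by two made-up metric surfaces (a smooth one and one with an isolated spike) purely to show the output. The function name `sensitivity_report` and the `max_jump` threshold are hypothetical choices.

```python
def sensitivity_report(evaluate, params, max_jump):
    """Score every parameter value and flag big jumps between neighbours.

    evaluate: callable mapping a parameter (e.g. a lookback in days) to a
              performance metric, typically by running the full backtest.
    params:   parameter values in increasing order.
    max_jump: largest acceptable metric change between adjacent values.
    """
    scores = [(p, evaluate(p)) for p in params]
    flags = [
        (p_prev, p_next, round(s_next - s_prev, 4))
        for (p_prev, s_prev), (p_next, s_next) in zip(scores, scores[1:])
        if abs(s_next - s_prev) > max_jump
    ]
    best = max(scores, key=lambda item: item[1])
    return scores, best, flags


# Two made-up metric surfaces over lookback windows (not results from the article)
def smooth(lookback):
    return 0.5 - 0.00002 * (lookback - 120) ** 2


spiky = {60: 0.40, 90: 0.42, 120: 0.95, 150: 0.41, 180: 0.39}

windows = [60, 90, 120, 150, 180]
_, best_smooth, flags_smooth = sensitivity_report(smooth, windows, max_jump=0.1)
_, best_spiky, flags_spiky = sensitivity_report(spiky.get, windows, max_jump=0.1)
print(best_smooth, flags_smooth)
print(best_spiky, flags_spiky)
```

A smooth plateau around the chosen parameter is reassuring; a single spike like the one in the second surface is exactly the red flag mentioned above, because the "best" value is probably a product of chance.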

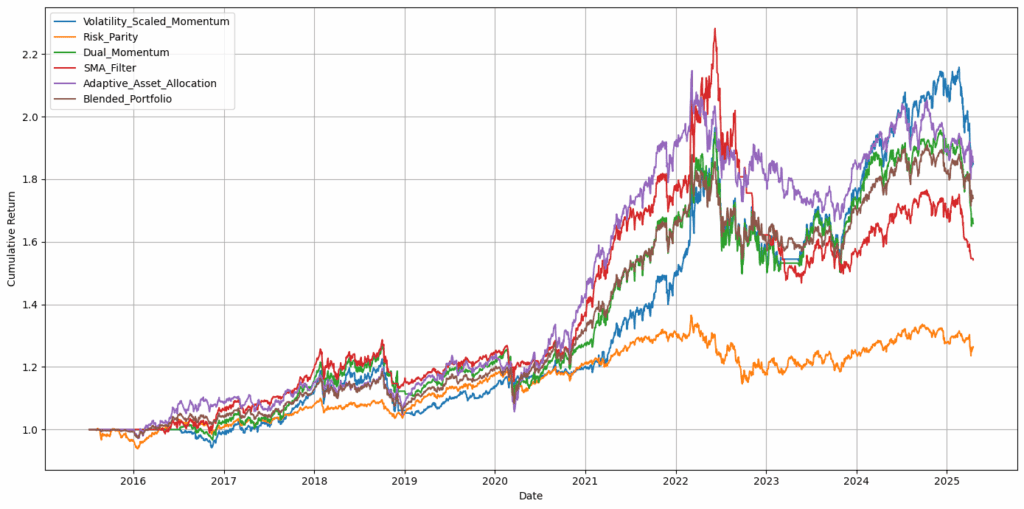

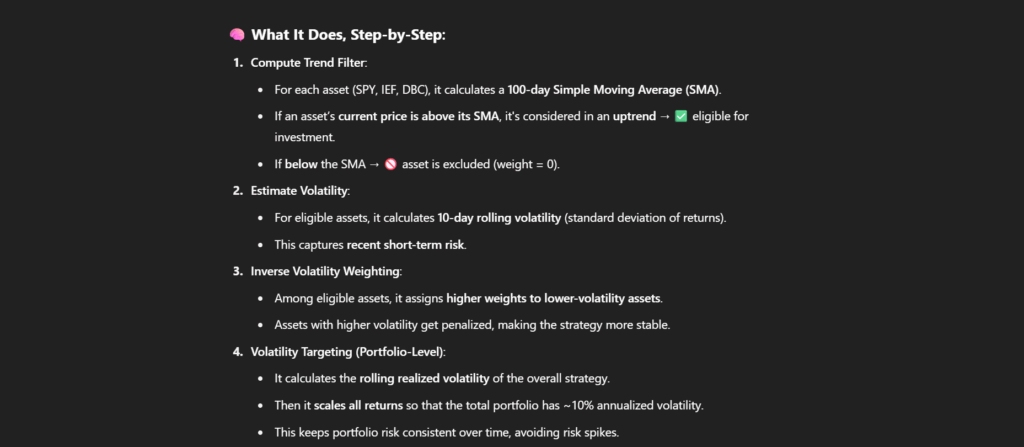

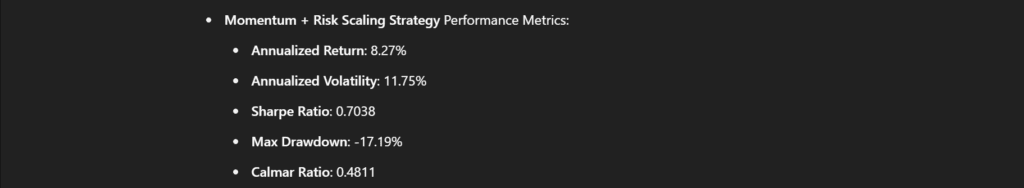

The final iteration of the asset allocation strategy, according to ChatGPT, is as follows:

The described strategy has the following properties:

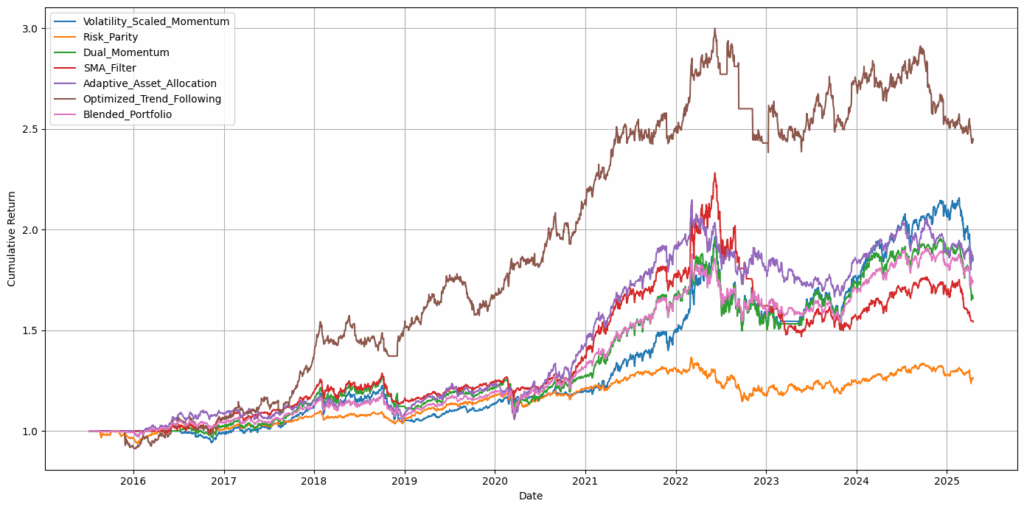

| Strategy | Annualized Return | Annualized Volatility | Sharpe Ratio | Max Drawdown | Calmar Ratio |
|---|---|---|---|---|---|
| Volatility Scaled Momentum | 6.49% | 11.93% | 0.5440 | -23.19% | 0.2800 |
| Risk Parity | 2.43% | 6.59% | 0.3686 | -16.07% | 0.1511 |
| Dual Momentum | 5.32% | 11.86% | 0.4484 | -23.19% | 0.2292 |
| SMA Filter | 4.54% | 11.46% | 0.3959 | -35.65% | 0.1272 |
| Adaptive Asset Allocation | 6.53% | 11.28% | 0.5790 | -22.41% | 0.2915 |
| Optimized Trend Following | 9.58% | 12.79% | 0.7491 | -20.55% | 0.4663 |
| Blended Portfolio | 5.83% | 9.38% | 0.6220 | -18.13% | 0.3217 |
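The Sharpe and Calmar columns in the table appear to follow directly from the other columns: Sharpe matches annualized return divided by annualized volatility (implying a zero risk-free rate), and Calmar matches annualized return divided by the absolute maximum drawdown. A few lines of Python can check this kind of internal consistency for any table a model prints. The function name `ratio_mismatches` is hypothetical, and the tolerance of 0.002 is an assumption chosen to absorb rounding of the printed values.

```python
# Rows copied from the table above: return %, volatility %, Sharpe, max DD %, Calmar
reported = {
    "Volatility Scaled Momentum": (6.49, 11.93, 0.5440, -23.19, 0.2800),
    "Risk Parity": (2.43, 6.59, 0.3686, -16.07, 0.1511),
    "Dual Momentum": (5.32, 11.86, 0.4484, -23.19, 0.2292),
    "SMA Filter": (4.54, 11.46, 0.3959, -35.65, 0.1272),
    "Adaptive Asset Allocation": (6.53, 11.28, 0.5790, -22.41, 0.2915),
    "Optimized Trend Following": (9.58, 12.79, 0.7491, -20.55, 0.4663),
    "Blended Portfolio": (5.83, 9.38, 0.6220, -18.13, 0.3217),
}


def ratio_mismatches(rows, tol=0.002):
    """Flag rows whose Sharpe or Calmar ratio does not follow from the other columns.

    Assumes Sharpe = return / volatility (zero risk-free rate) and
    Calmar = return / |max drawdown|.
    """
    problems = []
    for name, (ret, vol, sharpe, max_dd, calmar) in rows.items():
        implied_sharpe = ret / vol
        implied_calmar = ret / abs(max_dd)
        if abs(implied_sharpe - sharpe) > tol:
            problems.append((name, "Sharpe", round(implied_sharpe, 4), sharpe))
        if abs(implied_calmar - calmar) > tol:
            problems.append((name, "Calmar", round(implied_calmar, 4), calmar))
    return problems


print(ratio_mismatches(reported))
```

Passing this check only shows that the columns are consistent with each other; the underlying returns still have to be recomputed from your own data.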

These are the equity curves of the suggested active strategies and the final strategy (brown):

And here are the results of the robustness tests performed by the AI, which check that the parameter windows we used, such as lookback periods or rebalancing intervals, weren’t just conveniently chosen values that happened to produce exceptional results by chance.

What went well

So far, it seems like a happy story, right? We asked ChatGPT for a strategy, and in the end, we got one. It’s definitely a significant upgrade compared with the analysis we performed approximately 18 months ago. ChatGPT navigates quant finance well, can suggest many variations of asset allocation strategies, and always comes up with suggestions for the next steps in the analysis. The exploratory part of quant analysis is handled well. ChatGPT is an AI chatbot, and as such, it can communicate a lot of ideas and discuss them eloquently.

However, here comes the catch: it’s still a chatbot, not a data analyst, and a chatbot’s primary focus is to keep you happy with the “chatting.” What does that mean? It tends to be over-optimistic and sycophantic; it doesn’t “think,” it answers questions and tries to keep you engaged in the conversation. Many times, ChatGPT presented ideas or analyses that contained extremely naive mistakes, yet it described the results as if they were the best strategy or idea ever devised. Constantly re-checking the individual steps of the analysis was really tiring.

What went wrong

So, what were the issues we encountered, and what should you pay attention to when you experiment with chatbots as assistants in quantitative finance?

Data preparation

We encountered a few issues when working with data. Initially, we tried to have ChatGPT obtain the data directly from the internet, but that wasn’t possible, so we had to provide the data ourselves. This led to some unexpected problems. Since we used dates in the DD.MM.YYYY format and numbers with a comma as the decimal separator, ChatGPT really struggled to interpret the data correctly. The most reliable approach turned out to be providing the data in a format ChatGPT is more familiar with, typically YYYY-MM-DD for dates and a dot as the decimal separator. Preparing the dataset this way makes the interaction smoother and reduces misunderstandings during analysis.
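Converting the data before uploading it can be done with Python’s standard library. This is a minimal, hypothetical helper (`to_iso_csv`) that assumes a semicolon-delimited file, the date in the first column, numeric prices in the remaining columns, and no thousands separators; adjust these assumptions to match your own export.

```python
import csv
import io
from datetime import datetime


def to_iso_csv(raw_text, in_delimiter=";"):
    """Rewrite 'DD.MM.YYYY' dates and comma decimals as ISO dates and dot decimals.

    Output is a comma-separated CSV string with the header row copied as-is.
    """
    reader = csv.reader(io.StringIO(raw_text), delimiter=in_delimiter)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(next(reader))  # header row is copied unchanged
    for row in reader:
        if not row:
            continue  # skip blank lines
        day = datetime.strptime(row[0].strip(), "%d.%m.%Y").date()
        values = [float(cell.strip().replace(",", ".")) for cell in row[1:]]
        writer.writerow([day.isoformat(), *values])
    return buffer.getvalue()


# Made-up prices for illustration
sample = "Date;SPY;IEF;DBC\n02.03.2020;100,50;50,25;20,00\n"
print(to_iso_csv(sample))
```

Parsing with an explicit `%d.%m.%Y` format avoids the day/month ambiguity: 02.03.2020 is read as 2 March, whereas a parser guessing the format could read it as 3 February.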

Data corruption

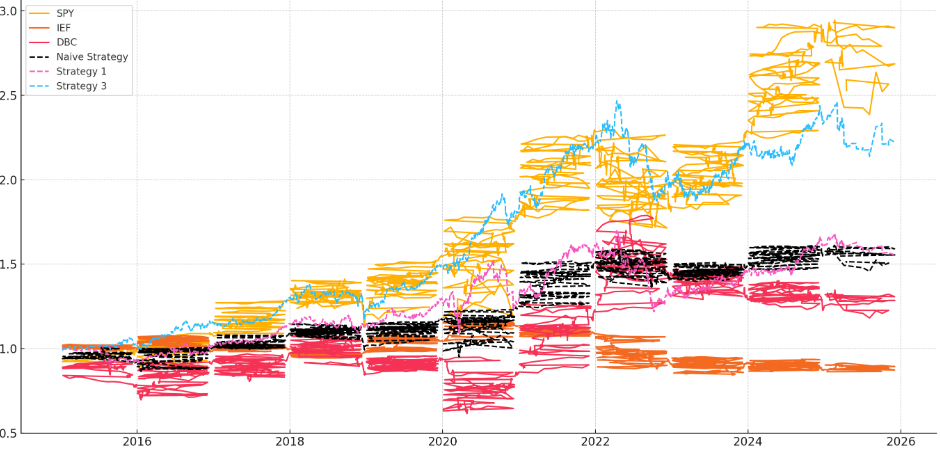

After running multiple models on the uploaded dataset, we experienced further issues. In some cases, the order of the data changed unexpectedly; in others, entire sections of data were lost. This led to outputs that were clearly incorrect or inconsistent with what we expected. The results looked like this:

This issue is closely related to how the model’s memory handles our data. We frequently had to re-upload the same dataset, as it was either forgotten during the analysis or became corrupted in various ways (and we did not understand why). This makes it harder to maintain consistency across tests and highlights the limitations of working with larger datasets in this kind of setup.
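Because reordered rows and silently dropped sections were the main symptoms, a cheap safeguard is to fingerprint the dataset before uploading it (row count, first and last date, a hash) and ask the model to compute the same summary on the data it holds. The sketch below is a hypothetical helper, `dataset_fingerprint`; the 5-calendar-day gap threshold is an assumption that suits daily exchange data, where weekends and holidays create shorter gaps.

```python
import hashlib
from datetime import date


def dataset_fingerprint(rows, max_gap_days=5):
    """Summarize (date, values) rows so two copies of a dataset can be compared.

    Flags dates that are not strictly increasing (reordered or duplicated rows)
    and calendar gaps longer than `max_gap_days` (possibly lost sections).
    """
    if not rows:
        raise ValueError("dataset is empty")
    dates = [d for d, _ in rows]
    issues = []
    for earlier, later in zip(dates, dates[1:]):
        if later <= earlier:
            issues.append(f"order broken at {later.isoformat()}")
        elif (later - earlier).days > max_gap_days:
            issues.append(f"gap of {(later - earlier).days} days after {earlier.isoformat()}")
    # SHA-256 over a canonical text form: any changed, lost or moved value changes it
    canonical = "\n".join(
        d.isoformat() + "," + ",".join(repr(v) for v in values) for d, values in rows
    )
    return {
        "rows": len(rows),
        "first": dates[0],
        "last": dates[-1],
        "sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "issues": issues,
    }


# Illustrative rows: (date, (SPY, IEF, DBC) prices) with made-up values
clean = [
    (date(2020, 1, 2), (100.0, 50.0, 20.0)),
    (date(2020, 1, 3), (101.0, 50.1, 20.2)),
    (date(2020, 1, 6), (102.0, 50.2, 20.1)),
]
shuffled = [clean[1], clean[0], clean[2]]
print(dataset_fingerprint(clean)["issues"], dataset_fingerprint(shuffled)["issues"])
```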

In the end, if you would like to run your own test analysis, we definitely recommend providing the chatbot with your own data. Because ChatGPT tends to make mistakes in the initial data handling, if you rely on data sourced by ChatGPT itself, you will not be able to catch some of the mistakes it makes.

Need for validation

When using AI to create a strategy, you often want to plot equity curves, calculate basic performance metrics, and so on. However, the model may interpret these tasks in its own way, which doesn’t always match your expectations. Sometimes the issues are obvious at first glance, but more often, you need to inspect the code carefully. The most common mistakes usually occur in data formatting, the implementation of the strategy function, and how returns, risk, and drawdowns are calculated.

Another related issue is overpromising on the theoretical side while underdelivering in the actual code. This often means that the model describes, for example, a strategy consisting of three rules applied to a dataset, but only implements two of them. In our case, the strategy was supposed to incorporate momentum, volatility, and correlations. However, correlations were not used in the implementation.
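One way to catch a silently dropped rule, such as the unused correlation input, is an ablation check: perturb each input in turn and confirm that the strategy’s output changes. The sketch below uses a deliberately buggy, hypothetical strategy function (`buggy_weights`) that accepts a correlation input but never reads it; the helper `unused_inputs` and all numbers are illustrative.

```python
def unused_inputs(strategy, base_inputs, perturbations):
    """Names of inputs whose perturbation leaves the strategy output unchanged."""
    baseline = strategy(**base_inputs)
    ignored = []
    for name, new_value in perturbations.items():
        modified = {**base_inputs, name: new_value}
        if strategy(**modified) == baseline:
            ignored.append(name)
    return ignored


def buggy_weights(momentum, volatility, correlation):
    """Hypothetical strategy that claims to use all three signals.

    Bug on purpose: `correlation` is accepted but never used.
    """
    raw = {asset: max(momentum[asset], 0.0) / volatility[asset] for asset in momentum}
    total = sum(raw.values()) or 1.0
    return {asset: value / total for asset, value in raw.items()}


base = {
    "momentum": {"SPY": 0.10, "IEF": 0.02, "DBC": -0.05},
    "volatility": {"SPY": 0.16, "IEF": 0.06, "DBC": 0.20},
    "correlation": 0.3,
}
changes = {
    "momentum": {"SPY": -0.10, "IEF": 0.02, "DBC": -0.05},
    "volatility": {"SPY": 0.32, "IEF": 0.06, "DBC": 0.20},
    "correlation": 0.9,
}
print(unused_inputs(buggy_weights, base, changes))
```

An input whose perturbation changes nothing is not necessarily ignored (the change may be too small to matter), but it tells you exactly which part of the generated code to read first.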

Hallucinations

In the context of AI, the term typically refers to a model generating information that is factually incorrect or fabricated, even though it may sound plausible.


In our case, we were exploring multiple strategies at once and aimed to analyze only the performance of the most successful among them. This setup increased the risk of errors going unnoticed, especially when the model appeared to execute each step correctly but had actually skipped or misapplied parts of the strategy logic. Without careful review, these inconsistencies can lead to misleading conclusions about a strategy’s effectiveness.

When we obtained the code for this strategy and ran our own analysis, the results we got were significantly different.

| Metric | Value |
|---|---|
| Annualized Return | 1.74% |
| Annualized Volatility | 2.58% |
| Sharpe Ratio | 0.6760 |
| Max Drawdown | -7.89% |
| Calmar Ratio | 0.2208 |

After we uploaded the data into the model a second time, the results it produced matched our own. How did ChatGPT calculate better ratios the first time, and why were they different? We have no idea.

This brought us back to an important part of the process: we (users, humans) have to validate the results at each step of the analysis, no matter how small or insignificant the step seems. It is absolutely crucial. ChatGPT sometimes produces completely made-up numbers (even when the code it suggests for calculating those numbers is correct).
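A small helper makes this validation routine: recompute every metric yourself and compare it with what the model reported. In the sketch below, the recomputed values are taken from the results table above (converted to fractions), while the "reported" figures are invented purely for illustration; the function name `compare_metrics` and the 5% relative tolerance are assumptions.

```python
import math


def compare_metrics(reported, recomputed, rel_tol=0.05, abs_tol=1e-4):
    """Return {metric: (reported, recomputed)} for every metric that disagrees.

    Metrics missing from `reported` are listed with None as the reported value.
    """
    mismatches = {}
    for name, mine in recomputed.items():
        theirs = reported.get(name)
        if theirs is None or not math.isclose(theirs, mine, rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches[name] = (theirs, mine)
    return mismatches


# Recomputed: values from the results table above, as fractions.
ours = {"annualized_return": 0.0174, "sharpe": 0.676, "max_drawdown": -0.0789}
# Reported: hypothetical chatbot figures, invented for this illustration only.
chatbot = {"annualized_return": 0.065, "sharpe": 0.90, "max_drawdown": -0.079}
print(compare_metrics(chatbot, ours))
```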

Cyclic conversations

When we discovered errors in the calculated performance metrics, we wanted to understand why they occurred. After a few follow-up prompts, the model circled around various explanations: differences in data, discrepancies in the structure of the strategy, or adjustments to its parameters. However, we pointed out (correctly) that none of these applied, since we had simply run the exact code provided by ChatGPT on the same dataset we had initially supplied. Even after we asked the model to re-run its code on the same input, we found ourselves in a loop where the AI kept deflecting the issue rather than acknowledging or correcting the faulty calculations. This experience illustrates a key limitation of using AI to debug or test a strategy: while it may seem confident, it doesn’t always reliably trace the root of its own mistakes.

If we take a step back and use AI just for brainstorming strategy ideas, we may encounter a similar issue. The model often gets stuck on one basic concept and tends to build everything around it. For example, if we begin with a strategy that involves selecting the top N assets based on a certain criterion, the model may continue to suggest only variations that treat this selection step as essential. Unless we explicitly state that we want to avoid using that criterion, it will likely remain a core part of every new proposal. This highlights a common limitation: AI tends to anchor on the initial direction and struggles to explore entirely different ideas unless firmly guided to do so.

Tendency to over-optimize

ChatGPT, as an analyst, tends to be an optimization machine. The suggestions it gives, and the ideas it presents as worth investigating, tend to add degrees of freedom to the strategy, so the strategy becomes more and more over-optimized to past data. ChatGPT doesn’t generalize well (as of now); it usually picks the best-performing version of the strategy, then looks for an explanation of why it is the best and tries to improve it even further. That is logical from the chatbot’s point of view, but it is not a good idea if you want to build a robust trading strategy. Therefore, ChatGPT’s suggestions often have limited value, and it is usually better to prompt it to continue in directions other than the ones it suggests. All in all, it is better to keep a human in charge than to rely blindly on a chatbot during analysis.

Conclusion

Artificial intelligence is a powerful tool that can assist with many tasks. It’s good at suggesting top-down ideas, drafting code outlines for testing, and occasionally helping you find a new direction when you’re stuck on a problem. However, there are several important limitations to keep in mind. For instance, you still need to source your own data for analysis, carefully check the code for potential errors, and avoid fully trusting the performance metrics (and even charts) printed by the model without verification.

Since our previous article, AI has made significant progress. It can now help automate parts of the workflow and save some precious time. However, even with these advances, the potential for errors remains high, and that risk needs to be factored in whenever you work with it. AI is like a sharp knife: you can make a lot of useful things with it, but if you do not know what you are doing, you can cut your own finger.

Authors:

David Belobrad, Quant Analyst, Quantpedia

Radovan Vojtko, Head of Research, Quantpedia

Are you looking for more strategies to read about? Sign up for our newsletter or visit our Blog or Screener.

Do you want to learn more about Quantpedia Premium service? Check how Quantpedia works, our mission and Premium pricing offer.

Do you want to learn more about Quantpedia Pro service? Check its description, watch videos, review reporting capabilities and visit our pricing offer.

Are you looking for historical data or backtesting platforms? Check our list of Algo Trading Discounts.

Would you like free access to our services? Then, open an account with Lightspeed and enjoy one year of Quantpedia Premium at no cost.

Or follow us on:

Facebook Group, Facebook Page, Twitter, Linkedin, Medium or Youtube
